Executive Overview

Artificial intelligence is advancing at unprecedented speed, yet organisational maturity is not keeping pace. As systems evolve from automation to autonomous reasoning and action, many enterprises are discovering that increased capability does not automatically translate into increased accountability. When autonomy scales without corresponding governance, judgment, and oversight, organisations risk a form of cognitive regression: decisions accelerate, while understanding, traceability, and control erode.

This monograph examines why Human-in-the-Loop (HITL) must be understood not as a tactical review mechanism, but as a governance and assurance architecture for intelligent systems. It reframes Responsible AI from a policy aspiration into an operational discipline, demonstrating how ethical intent, risk governance, management-system assurance, and delivery execution can be integrated into a single, auditable operating model.

Written for leaders who carry formal accountability for AI outcomes, including board members, Audit and Risk Committees, senior technology executives, regulators, and practitioner-scholars, this paper argues that trust in AI cannot be assumed or declared. It must be engineered, measured, and sustained as autonomy increases.

This document provides an executive-level synthesis of the core principles for governing intelligent systems at scale. The full monograph expands these concepts into detailed architectural models, diagrams, delivery patterns, and implementation considerations, and is available on request.

1. From Automation to Responsibility Intelligence

1.1 The End of the Experimental Phase

Artificial intelligence has outgrown its experimental phase. AI systems now write software, optimise workflows, generate insights, and increasingly participate in operational decision-making once reserved for humans. In this environment, the distinction between “pilot” and “production” has effectively collapsed. The consequences of AI behaviour are no longer hypothetical; they are material, immediate, and often irreversible.

Many organisations assume that because AI capabilities appear sophisticated, their governance and judgment structures are equally mature. This assumption is frequently incorrect. As autonomy scales, a persistent truth becomes unavoidable: without continuous human judgment, contextual awareness, and ethical alignment, decision-making drifts beyond effective accountability. What appears as acceleration or technical sophistication can, in practice, represent cognitive devolution: organisations rely on increasingly powerful tools while exercising diminishing understanding, oversight, and control.

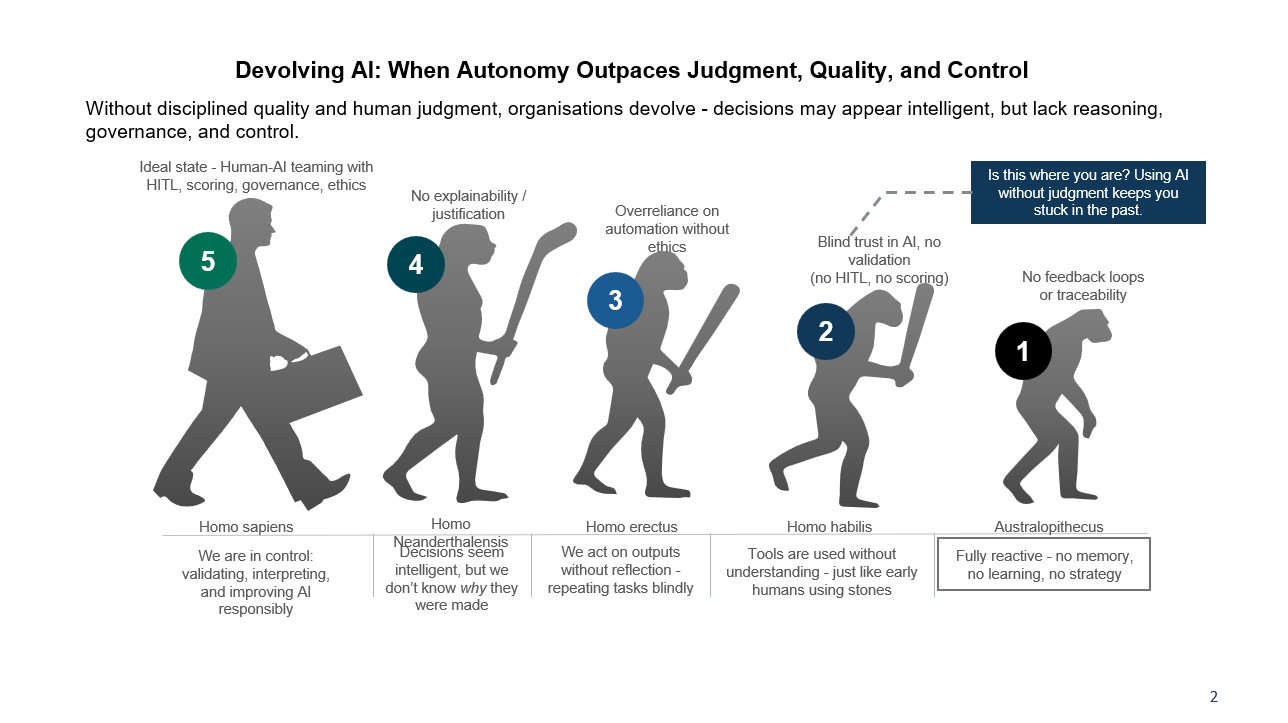

Figure 1: Devolving AI - When Autonomy Outpaces Judgment, Quality, and Control

Figure 1 illustrates how organisations can appear technologically advanced while operating at progressively weaker levels of governance maturity. As automation increases without Human-in-the-Loop oversight, explainability, and feedback, decision-making becomes faster but less accountable. The diagram reframes AI risk not as a technical failure, but as an organisational regression in which tools evolve faster than judgment.

The core challenge, therefore, is not the absence of principles or frameworks. It is the absence of operational coherence between ethical intent, governance control, assurance mechanisms, and day-to-day delivery.

1.2 The Illusion of AI Maturity

AI maturity is often measured by capability: model accuracy, automation coverage, or deployment velocity. This framing is misleading. Maturity is not defined by what AI systems can do, but by how effectively human judgment governs their use.

Organisations may appear technologically advanced while remaining cognitively constrained, mistaking automation for intelligence. Without explainability, feedback loops, and accountability mechanisms, AI systems can generate outputs faster while degrading learning, memory, and strategic control. The result is an illusion of progress: systems that look modern but behave primitively from a governance perspective.

True maturity emerges only when autonomy is conditional, explainable, and subject to human authority.

1.3 Two Enterprise Models: AI-Driven vs AI-Augmented

At the point where automation evolves into autonomous reasoning and action, organisations diverge into two fundamentally different delivery paradigms.

In AI-driven delivery, autonomy is prioritised, and governance is treated as secondary or retrospective. Human involvement is reduced to configuration, exception handling, or post-hoc review. Speed and scale dominate success metrics, while accountability becomes diffuse. These systems may perform efficiently in the short term, but they accumulate risk silently as decision logic evolves beyond consistent human understanding.

By contrast, AI-Augmented Engineering deliberately co-designs automation and human judgment. Governance, traceability, and Human-in-the-Loop oversight are embedded directly into delivery workflows. Autonomy is permitted to increase, but only in proportion to governance maturity. Accountability remains explicit, measurable, and enforceable.

The distinction is not technological. It is structural - the difference between autonomy that emerges implicitly and autonomy that is deliberately governed.

2. Agentic AI and the Accountability Boundary

As AI systems become agentic, capable of planning, reasoning, and orchestrating actions across workflows, accountability cannot be inferred solely from outcomes. It must be designed into the system.

AI-Augmented Engineering enables the responsible deployment of agentic AI by establishing a clear accountability boundary. Within this boundary, autonomy is conditional rather than absolute. Actions are governed by confidence thresholds, explainability requirements, policy constraints, and escalation rules. Human-in-the-Loop telemetry determines when systems may act independently and when human authorisation is mandatory.
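As an illustration only, the accountability boundary described above can be sketched as a small routing function. Every name here (`ProposedAction`, `Route`, `route_action`) and the 0.9 confidence threshold are assumptions made for this example; they are not taken from the monograph or from any standard. The sketch shows the ordering of checks: policy constraints first, then explainability and confidence, with escalation to a human as the default whenever a condition for autonomy is not met.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ACT_AUTONOMOUSLY = "act_autonomously"
    ESCALATE_TO_HUMAN = "escalate_to_human"
    BLOCK = "block"


@dataclass(frozen=True)
class ProposedAction:
    """A hypothetical action an agent wants to take."""
    name: str
    confidence: float       # system-reported confidence, 0.0 to 1.0
    has_explanation: bool   # is a human-readable rationale attached?
    violates_policy: bool   # outcome of an upstream policy check (assumed)


def route_action(action: ProposedAction, min_confidence: float = 0.9) -> Route:
    """Decide whether an action may proceed without human authorisation.

    The threshold value is illustrative; in practice it would be set per
    risk tier by the accountable owner.
    """
    # Policy constraints are absolute: a violating action never proceeds.
    if action.violates_policy:
        return Route.BLOCK
    # Missing rationale or low confidence triggers the escalation rule.
    if not action.has_explanation or action.confidence < min_confidence:
        return Route.ESCALATE_TO_HUMAN
    # Only actions inside the boundary may run autonomously.
    return Route.ACT_AUTONOMOUSLY
```

In this sketch autonomy is the last outcome reached rather than the first, which mirrors the idea that independence is conditional and must be earned by satisfying each control.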

This approach aligns with the risk-based logic articulated by the NIST AI Risk Management Framework, which emphasises governed delegation, continuous measurement, and managed risk as foundational. Accountability remains traceable even as systems become increasingly autonomous.

By contrast, AI-driven models often enable agentic behaviour without embedding these controls, relying on retrospective review or infrastructure-level safeguards. Such approaches struggle to sustain trust, auditability, and regulatory confidence at scale.

3. The Three Governing Shifts

AI-enabled organisations must govern three dimensions that truly scale: velocity, autonomy, and trust. These dimensions are interdependent. Acceleration without alignment creates risk. Autonomy without oversight erodes accountability. Trust without evidence collapses under scrutiny.

Governing Velocity

Agile delivery optimised speed in human-centric systems. AI-Augmented Engineering introduces the obligation to govern velocity. As automation collapses the distance between intent and execution, Human-in-the-Loop oversight ensures that speed does not outpace accountability. Delivery remains fast, but it is also explainable and auditable.

Governing Autonomy

AI systems are evolving from retrieval to reasoning to generation. Each increase in cognitive capability introduces new risks and governance requirements. AI-Augmented Engineering treats autonomy as a design variable rather than an emergent side effect. Systems may act independently, but only within explicitly authorised limits.

Governing Trust

Trust cannot be assumed in autonomous systems. It must be engineered. Transparency, traceability, and assurance become system properties rather than aspirational values. Trust emerges not from promises, but from observable, auditable behaviour.

4. The Responsible AI Governance Stack

Responsible AI does not arise from a single framework or standard. It emerges from the integration of multiple layers, each operating at a different level of abstraction.

Ethical Intent - OECD Principles

The OECD Principles on Artificial Intelligence establish the global ethical baseline for trustworthy, human-centred AI. They define what AI systems should serve and why those values matter, emphasising fairness, transparency, robustness, and accountability.

Governance Control - NIST AI RMF

The NIST AI Risk Management Framework translates ethical intent into operational governance through its Govern, Map, Measure, and Manage functions, providing a continuous control loop for AI risk.

Management-System Assurance - ISO/IEC 42001

ISO/IEC 42001 establishes how responsibility is proven rather than asserted, requiring documented controls, monitoring, and continuous improvement.

Delivery Governance - CRISP-AIML

CRISP-AIML embeds ethical intent, governance controls, and assurance requirements directly into the AI delivery lifecycle, making responsibility a property of execution rather than an after-the-fact check.

Together, these layers form a coherent governance stack spanning intent, control, assurance, and execution.

5. From Governance to Operating Reality

Frameworks and standards matter only when they shape behaviour in live systems. The AI operating model is where Responsible AI becomes executable - where intent is converted into function and accountability becomes a property of everyday delivery.

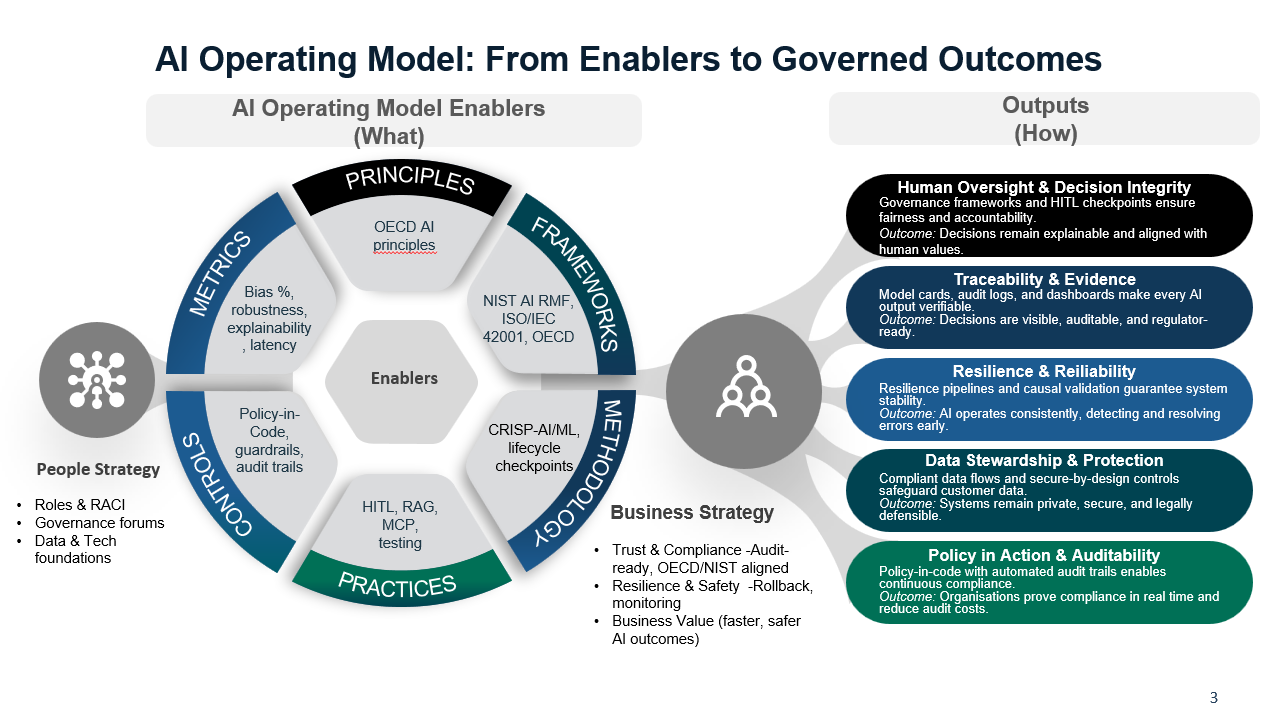

Figure 2: AI Operating Model: From Enablers to Governed Outcomes

Figure 2 illustrates how ethical principles (OECD), governance frameworks (NIST), delivery methodologies (CRISP-AIML), and Human-in-the-Loop practices integrate into a single operating model. Rather than treating governance as an external constraint, the model shows how policy, controls, metrics, and practices combine to produce governed outcomes: explainable decisions, traceability, resilience, data stewardship, and auditable compliance.

At this level, governance is no longer episodic. It is continuous. Ethical intent becomes observable behaviour. Accountability becomes measurable. Trust becomes an engineered outcome rather than an assumption.

6. Human-in-the-Loop as Operating Architecture

Human-in-the-Loop is not a review step or a compliance control. It is the operating architecture that binds ethical intent, governance control, assurance, and delivery execution into a single system of accountability.

HITL embeds human judgment directly into automated decision flows. Context preserves intent. Intervention gates enforce control. Feedback governs learning. Audit trails provide assurance. Authority for consequential decisions remains explicitly human, even when execution is automated.

Accountability is produced continuously - not reconstructed after failure.
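A minimal sketch of how these elements might fit together in code follows. It assumes a hypothetical `InterventionGate` class in which the human decision is supplied as a callable; the class, its fields, and the `approval_rate` feedback signal are illustrative assumptions, not a design prescribed by the monograph. The point it demonstrates is that the audit record is written at the moment of decision, alongside the context and the identity of the human who held authority.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass(frozen=True)
class AuditRecord:
    """One entry in the audit trail, written when the decision is made."""
    timestamp: str
    decision_id: str
    context: str
    proposed: str
    approved: bool
    reviewer: str


@dataclass
class InterventionGate:
    """Hypothetical gate: automation proposes, a named human authorises."""
    reviewer: str
    approve: Callable[[str, str], bool]  # human judgment: (context, proposed) -> bool
    audit_trail: List[AuditRecord] = field(default_factory=list)

    def submit(self, decision_id: str, context: str, proposed: str) -> bool:
        # Authority for the decision stays with the human reviewer.
        approved = self.approve(context, proposed)
        # The record is produced at decision time, not reconstructed later.
        self.audit_trail.append(AuditRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            decision_id=decision_id,
            context=context,
            proposed=proposed,
            approved=approved,
            reviewer=self.reviewer,
        ))
        return approved

    def approval_rate(self) -> float:
        """A simple feedback signal: the share of proposals humans approved."""
        if not self.audit_trail:
            return 0.0
        return sum(r.approved for r in self.audit_trail) / len(self.audit_trail)
```

A falling approval rate in such a design could be treated as evidence that the system's proposals are drifting from human intent, which is one way feedback might govern how much autonomy is subsequently granted.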

7. Trust as an Operational and Economic Asset

In AI-enabled organisations, trust is no longer abstract. It is operational and economic.

Systems that are explainable, governable, and auditable deploy faster, face less regulatory friction, and achieve higher adoption. Human-in-the-Loop transforms trust from a promise into evidence, enabling organisations to scale autonomy without accumulating ethical debt.

Responsible AI, when operationalised correctly, becomes a source of competitive advantage rather than a compliance burden.

Conclusion: Governing Intelligence at Scale

Artificial intelligence has crossed a structural threshold. Systems now reason, generate, and act with levels of autonomy that exceed traditional governance assumptions. The defining challenge of the intelligent era is not how much autonomy machines can be given, but how deliberately humans choose to govern it.

Responsible AI failures do not arise from a lack of principles or frameworks. They arise from fragmentation. Human-in-the-Loop resolves this fragmentation by binding intent, governance, assurance, and execution into a single, auditable system.

The leaders of the intelligent era will not be those who automate the most. They will be those who govern autonomy most deliberately.

About the Author

Darin Aarons, MTM (AI), is a senior technology and product executive with over twenty-five years of global experience in transforming, designing, leading, and governing complex digital and data-driven systems across regulated industries, including financial services and large-scale enterprise transformation programmes.

He holds a Master of Technology Management with a specialisation in Artificial Intelligence and operates as a practitioner-scholar at the intersection of AI governance theory and design-level implementation. Over the past several years, his work has focused on enterprise AI transformation, including translating ethical principles, regulatory expectations, and assurance standards into enforceable system controls, delivery frameworks, and operating models.

Darin has held responsibility for technology strategy, enterprise architecture, delivery governance, and control integrity, with particular emphasis on embedding explainability, traceability, Human-in-the-Loop oversight, and auditability directly into AI-enabled systems. His experience spans both the conceptual design of Responsible AI governance and its execution within live production environments where autonomy, risk, and accountability must be actively managed.

His perspective reflects sustained engagement with the practical realities of AI delivery, regulatory scrutiny, and organisational accountability, and informs the design patterns and operating models presented in this monograph.

If you have further questions or would like to speak with Darin, please get in touch.